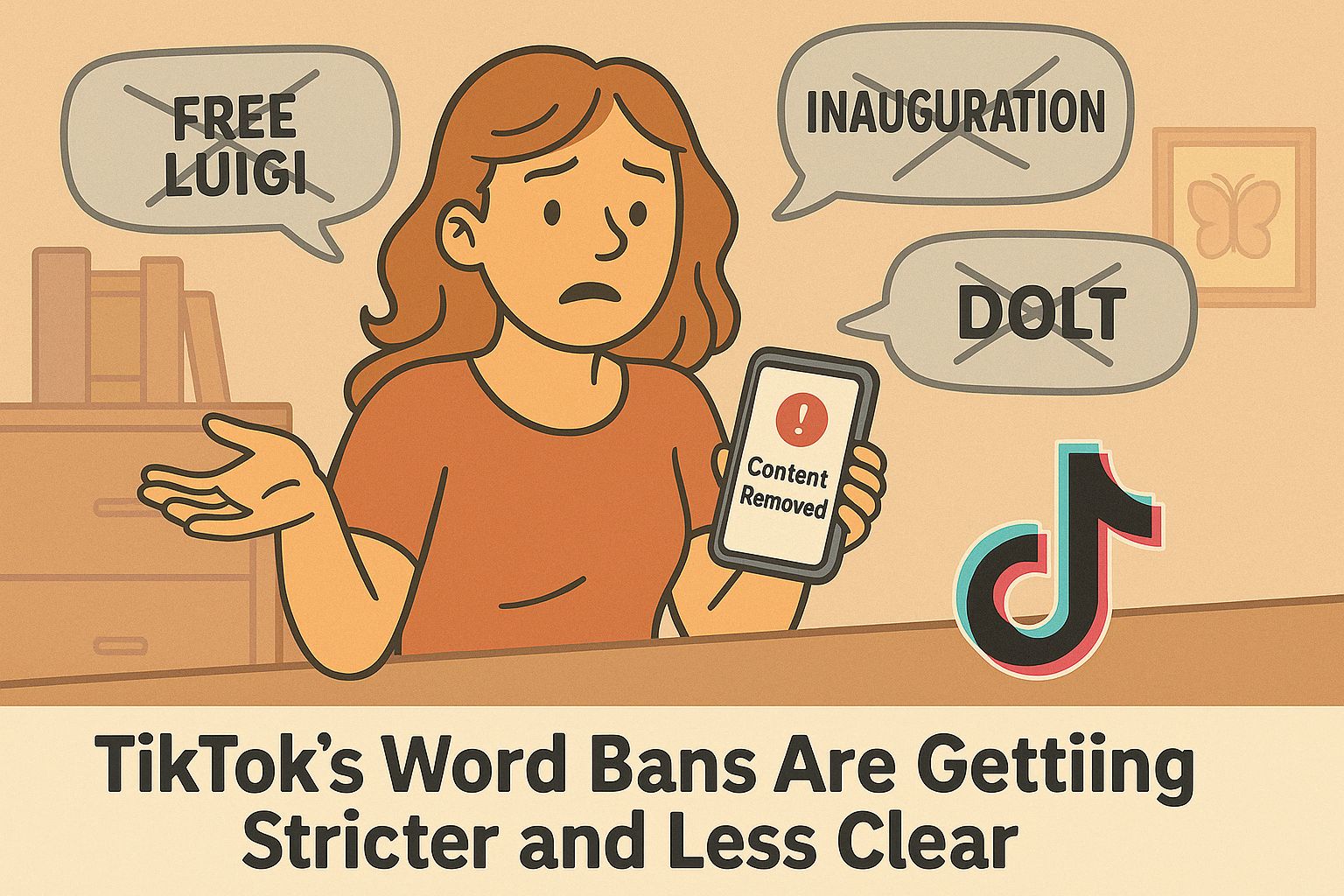

TikTok’s Word Bans Are Getting Weirder and Nobody Knows Why

Lately, saying the wrong word on TikTok—even if it’s harmless or completely unrelated to current events—can get your content flagged, removed, or worse… earn your account a strike.

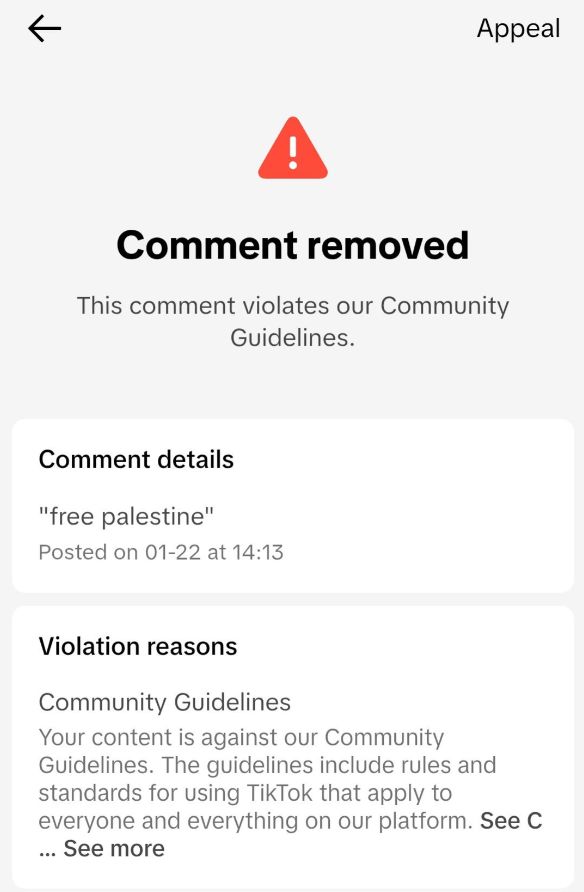

Creators are reporting violations for words and phrases like “Free Palestine,” “Free Luigi,” “inauguration,” “dolt,” “racist,” and even everyday teasing in comments between friends. At the same time, blatantly hateful comments and slurs are somehow passing moderation untouched.

It’s left people confused, anxious, and fed up with an algorithm that seems more focused on silencing phrases than stopping actual harm.

In this article, you’ll learn:

- What kinds of words are reportedly getting flagged or removed
- Why TikTok moderation feels more aggressive and inconsistent
- How the current system favors over-filtering while missing actual hate speech
- Why creators feel like they’re walking on eggshells
- What you can do to avoid unintentional strikes
- Whether alternatives like Rednote are actually worth exploring

What People Are Getting Flagged for Now

There’s always been a list of “don’t say that” terms on TikTok—but lately, it feels like the list has tripled overnight, and nobody got the memo.

Users are getting flagged for saying things like:

- “Free Palestine”
- “Free Luigi” (yes, seriously)
- “Inauguration”
- Mild insults like “dolt” or “dense”
- Descriptions of political events
- Even harmless flirting or tone tags like “/j” (joking)

At the same time, deeply offensive comments—slurs, hate symbols, and direct threats—are being reported and somehow not removed.

It’s not just frustrating. It feels upside down. The stuff getting silenced isn’t necessarily dangerous, while the stuff that is dangerous gets shrugged off by moderation bots or vague rejection messages like “no violation found.”

Why TikTok Moderation Feels Like It’s Suddenly in Overdrive

Moderation has always been part of TikTok, but something shifted. Comments and videos that were fine a month ago are now vanishing. Some users are getting auto-muted mid-livestream. Others are watching their comment history disappear line by line, even for stuff they posted years ago.

This isn’t coming from random users mass-reporting content (though that does happen). It looks like TikTok has either:

- Tweaked its algorithm
- Rolled out stricter AI-based moderation
- Or quietly introduced new internal “banned term” policies without telling anyone

The platform never explains which rule you broke. You just get a generic strike or takedown, leaving creators to guess what word triggered it. The result? Everyone’s tiptoeing around their own vocabulary and hoping TikTok doesn’t misinterpret their joke, their opinion—or their autocorrect.

The Real Problem: Over-Filtering Without Stopping Real Harm

If the system worked equally across the board, creators might grumble but move on. But that’s not what’s happening.

People are watching their content get flagged for the word “foolish” while literal hate speech—including slurs and Nazi references—is being reported and left untouched. There are even stories of videos being edited by TikTok itself to remove flagged words, or comment sections being scrubbed mid-discussion for saying something like “dense.”

Meanwhile, users are documenting blatant antisemitism, racism, and calls for violence that TikTok’s review system says don’t violate community guidelines.

It’s left many creators wondering: is moderation just broken? Or is it selectively broken?

Why Creators Feel Like They’re Being Silenced for Nothing

It’s one thing to get flagged for a mistake. It’s another to get flagged just for… talking.

That’s what’s got so many people feeling anxious right now. Creators are reporting:

- Getting violations for harmless words or inside jokes
- Being too nervous to comment on their own videos
- Going back through old posts to delete anything that might trigger moderation
- Watching their friends get strikes for saying things like “that was silly” or “well, duh”

And the weirdest part? TikTok doesn’t tell you what to avoid. There’s no updated list of banned words, no clear community explanation, and definitely no nuance in how the moderation bots are scanning language.

So people are starting to censor themselves—or quit the app entirely—not because they broke the rules, but because they’re scared of breaking ones they can’t see.

How to Avoid Getting Flagged Without Losing Your Voice

First, let’s be honest: there’s no perfect formula for avoiding TikTok’s moderation system. It flags things inconsistently and often without warning. But you can reduce your chances of getting hit by:

- Rephrasing sensitive topics using coded or symbolic language
- Avoiding sarcasm or tone that might be misread by moderation bots
- Using emojis and indirect phrasing sparingly (even seemingly harmless emojis have caused issues for some users)
- Staying away from hot-button political terms unless you’re prepared for review
- Keeping copies of your content off-platform in case it gets removed

Personally, I’ve been using Systeme IO as more than just an email tool—it’s where I back up drafts, captions, and post ideas before I even touch the TikTok app. If a video gets flagged, at least I still have the original to work with.

And if you do get flagged? Appeal it. TikTok’s appeal system isn’t perfect, but creators have had success reversing strikes, especially when the original “violation” was clearly a false positive.

Still, this whole process is exhausting—and it’s making creators feel like they have to outsmart the algorithm just to say anything at all.

Should You Stick Around or Find Another Platform?

Some people are already jumping ship. Apps like Rednote are getting name-dropped as alternatives, with promises of fewer filters, more transparency, and a community that doesn’t feel like it’s walking on broken glass.

But let’s be real: TikTok still has the reach, the tools, and the momentum.

So unless a full migration happens (like what we saw when people started ditching Twitter), most creators are sticking it out for now. What is shifting, though, is the tone—creators are getting more cautious, more creative with how they communicate, and more frustrated that they even have to.

And if TikTok doesn’t address the gap between what it censors and what it allows? That trust may not last much longer.

TikTok’s Word Bans Are Silencing the Wrong Voices

It’s not just that moderation has gotten stricter—it’s that it feels random and misplaced.

Harmless words are being flagged. Creators are scared to speak. Meanwhile, the genuinely harmful stuff is sometimes left untouched.

Whether it’s a bot gone rogue, an algorithm update no one asked for, or something deeper under the hood, the end result is the same: creators don’t feel safe expressing themselves anymore—not because they’re doing anything wrong, but because they don’t know what’s “wrong” until it’s too late.

And that’s not a healthy space to create in.

If TikTok wants to keep the trust of its users, it needs to offer more clarity, better moderation, and a whole lot more consistency. Because right now, it’s not censorship that’s silencing people—it’s confusion.