TikTok Is Banning Users Over Harmless Comments

Something’s broken at TikTok.

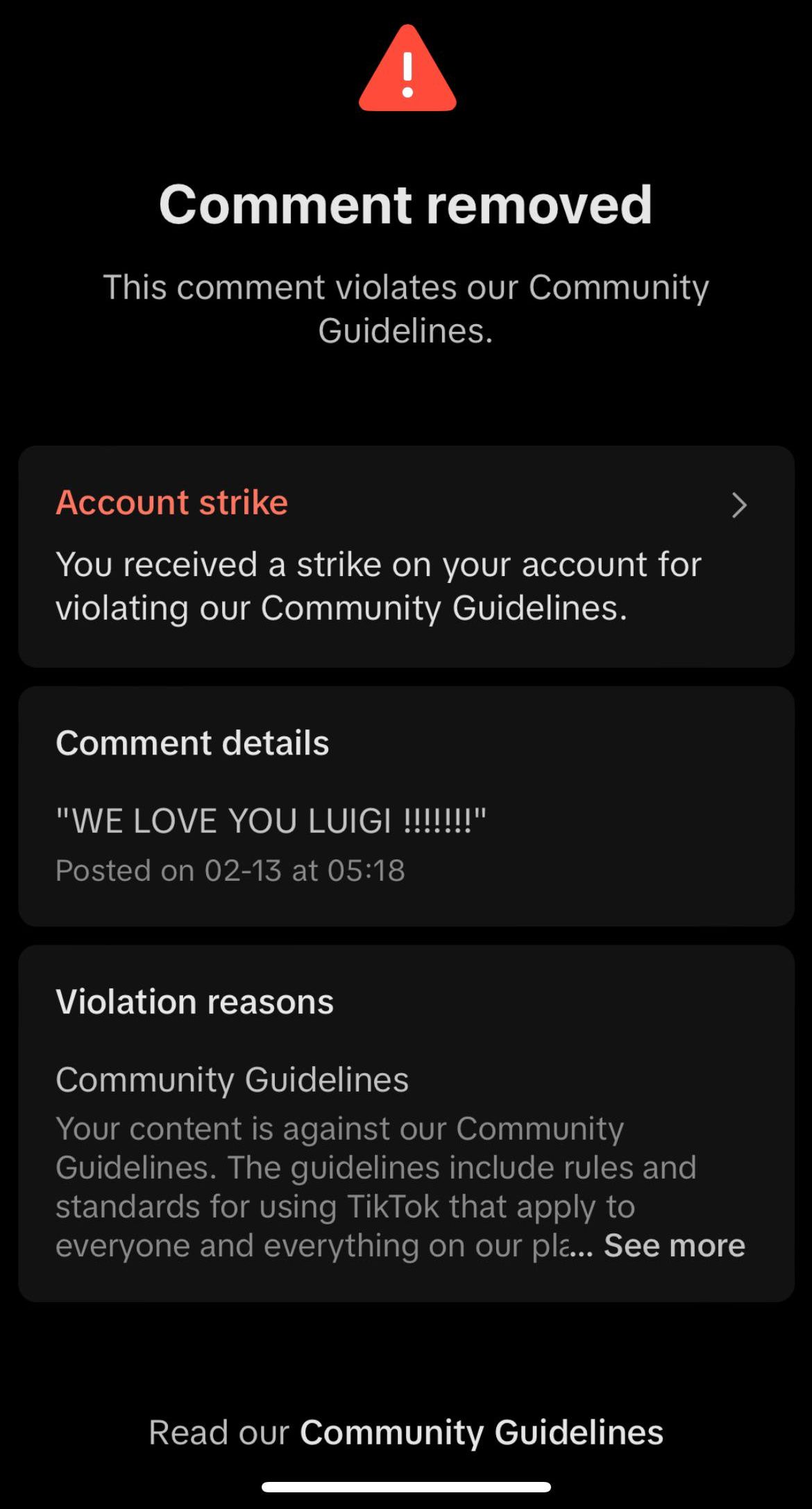

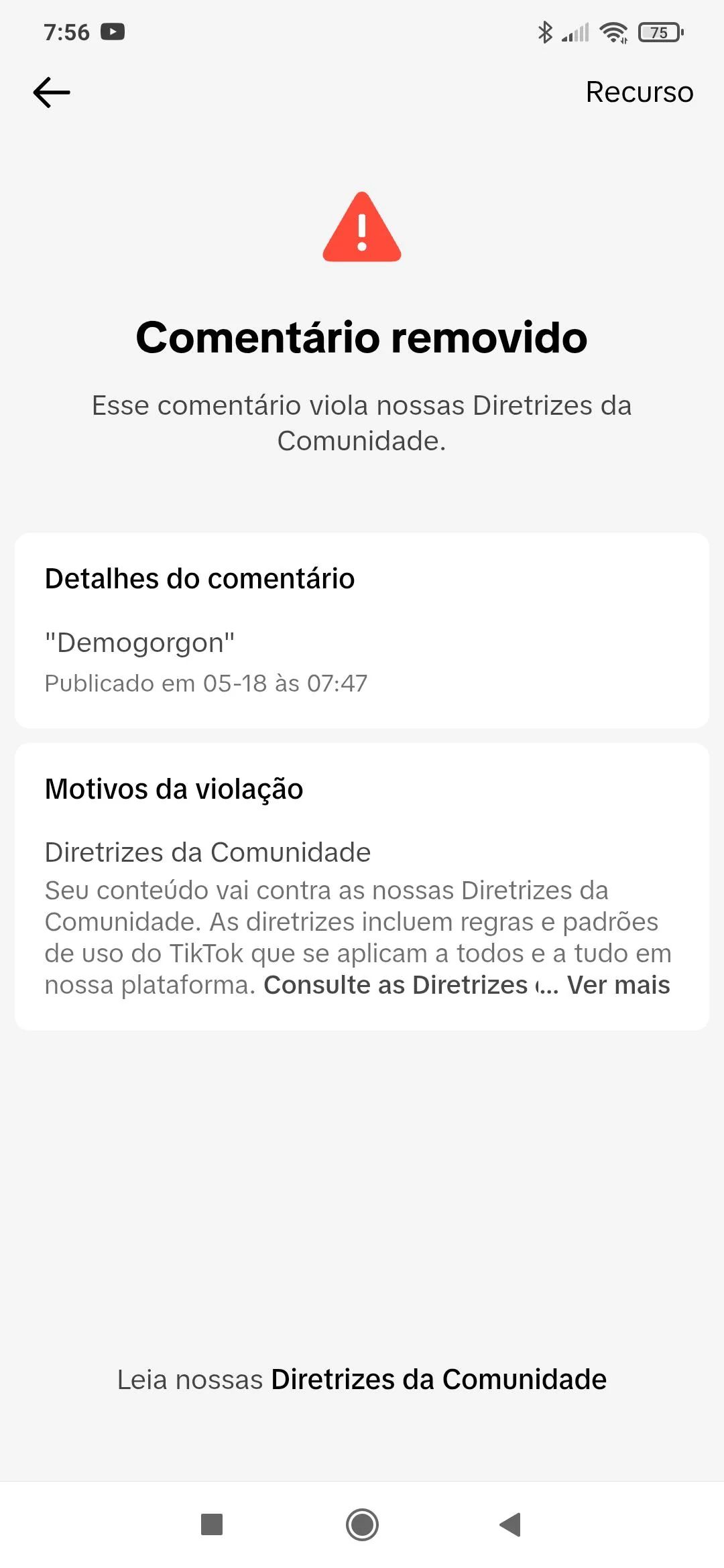

People are getting hit with comment removals, account strikes, and even permanent bans over the most innocent messages imaginable. Stuff like:

- “WE LOVE YOU LUIGI !!!!!!!!”
- “Demogorgon”
- A vomiting emoji
- And even “how”

No context. No hate speech. No threats. Just ordinary words or harmless reactions.

Meanwhile, users keep reporting actual racism, misogyny, and dangerous content that somehow passes moderation untouched.

This isn’t just one person’s story. A 6-year-old account with millions of likes and years of memories got wiped overnight — no appeal, no explanation, just “this violates our Community Guidelines.”

The platform is glitching out or clamping down. Maybe both.

And if you rely on TikTok for your content, your community, or your income — this should worry you.

In this article, I’ll break down:

- Why harmless comments are triggering bans
- The role AI moderation might be playing
- What users are saying about shadow rules and hidden keywords
- How this affects creators, casual users, and even big accounts
- What you can do to protect your content going forward

The Strikes Make No Sense — and That’s the Problem

The bans aren’t coming from hate speech or rule-breaking. They’re coming from totally normal comments.

One user got flagged just for saying “how.” Another got a strike over the word “dumb.” Someone else typed “Demogorgon” under a video whose subject vaguely resembled one, and that was enough to trigger a removal.

Even emojis aren’t safe.

The vomiting emoji? Removed.

The clown emoji? Often flagged.

The word “Eww”? Taken down for “violating guidelines.”

At this point, people are scared to comment anything. One user said they stopped commenting entirely after getting a strike for a comment on a photo of a spoon.

This isn’t content moderation — it’s chaos.

And it doesn’t stop at removed comments. Strikes stack up behind the scenes, and you don’t even know how many you’ve racked up until TikTok bans you permanently. No warning. Just a message saying the ban is final and your content can’t be restored.

When users appeal, they get generic replies like:

“Your content violates our Community Guidelines and cannot be restored.”

But no one explains what the actual violation was. No timestamps. No context. No chance to learn and adjust.

And in the background, TikTok’s automation keeps sweeping people off the platform.

AI Moderation Is Likely Driving the Crackdowns

There’s a pattern here — and it looks automated.

TikTok moderators aren’t reviewing every comment by hand. Most of the removals seem to happen within seconds. That points to AI-based moderation.

But the AI isn’t working the way it should.

It’s flagging:

- Neutral words out of context
- Pop culture references like “Demogorgon”
- Emotional reactions like “eww,” “gross,” or the vomiting emoji
- Anything that might even slightly be misread as offensive

Meanwhile, clear violations — hate speech, slurs, threats — stay up. Users report them, and TikTok replies: “No violation found.”

This mismatch has people convinced that TikTok’s AI has gone rogue.

It doesn’t understand nuance. It doesn’t understand sarcasm. It can’t tell whether “ugly” is being used as an insult or by someone defending another user.

One user pointed this out directly:

“They deleted a comment where I’m defending someone and I said: ‘Calling her ugly is a lot.’”

Removed for “inappropriate content.”

And let’s not forget TikTok’s blackout earlier this year, when the app went dark for hours. Users say the algorithm has gotten way more aggressive since then, and the timing lines up with reports that TikTok was moving its infrastructure, possibly tying moderation even more tightly to AI-driven enforcement.

If your comment even slightly trips a keyword filter, you’re at risk.
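To see why context-blind filtering misfires in both directions, here is a minimal Python sketch of a naive keyword filter. It is purely hypothetical: TikTok hasn’t published how its moderation works, and the blocklist below is made up from words users reported, not TikTok’s actual list. The filter checks each word against the list and ignores everything else about the sentence.

```python
import re

# HYPOTHETICAL blocklist built from words users reported in this article.
# This is NOT TikTok's real list, which the company does not publish.
BLOCKLIST = {"ugly", "eww", "gross", "dumb", "knob"}


def naive_flag(comment: str) -> bool:
    """Return True if any word in the comment appears on the blocklist.

    Context is ignored entirely: the filter never considers who is being
    addressed, what the sentence means, or whether the word is negated.
    """
    words = re.findall(r"[a-z']+", comment.lower())
    return any(word in BLOCKLIST for word in words)


examples = [
    "Calling her ugly is a lot!",  # defending someone, still flagged
    "Eww, I love this so much",    # playful reaction, flagged
    "What a great video",          # no listed word, passes
]

for text in examples:
    status = "FLAGGED" if naive_flag(text) else "ok"
    print(f"{status:8} {text}")
```

Both halves of the complaint fall out of this design: a comment defending someone gets flagged because it contains “ugly,” while anything hateful that simply isn’t on the list passes untouched. TikTok’s real system is almost certainly more complex than this, so treat it as an illustration of the failure mode users describe, not a model of TikTok’s pipeline.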

Comments Stay Up — But Only If They’re Actually Offensive?

Here’s the real kicker:

While harmless comments keep getting flagged and accounts are being nuked for saying “dumb” or “how,” the worst kinds of comments often stay untouched.

Multiple users reported seeing:

- People typing out racial slurs, including the N-word, with no action taken
- Open praise for mass shooters
- Nazi symbols and swastikas
- Gooning content left live on the For You page
- Extreme misogyny and hate speech in livestream chats

They report it. TikTok reviews it. Then they get the message:

“No violation found.”

It’s infuriating. One person said their account was banned after they referenced Luigi, while the app was serving them videos of a girl gooning in a mirror, apparently from the show PEN15.

Another said their entire account was removed after years of small, harmless infractions, while offensive content targeting entire communities continues to thrive.

The message is clear: TikTok’s moderation isn’t about safety. It’s about keyword triggers and surface-level algorithmic scans. If your comment doesn’t match a preset filter, it slides.

If it does? Even if it’s a compliment or a pop culture reference — you’re done.

Creators Are Getting Wiped Out Overnight

The original post that inspired this article came from a creator who lost everything.

They’d been on TikTok since 2019. Over 16,000 followers. Millions of likes. Years of videos — including personal content they can never replace, like:

- Drafts never published
- Videos with old coworkers
- A clip of their dog that passed away

All gone.

No warning. No appeal. Just a canned message saying their account was permanently banned and nothing could be restored.

And this isn’t a one-off. Creators in the replies reported similar stories:

- Someone with hundreds of thousands of followers banned in an instant
- Another user banned simply for saying “ofc I’m cruel to racists”
- Many creators now running burner accounts to post or comment without losing their main profiles

The fear is real. TikTok is treating everyone like they’re disposable.

Even those with massive followings, years of content, and clean records are one flagged comment away from deletion.

And if you try to fight it?

Expect generic responses, appeals that go nowhere, and zero transparency from the platform.

Shadow Rules and Keyword Traps Are Everywhere

TikTok doesn’t publish a full list of banned phrases, but based on what users are sharing, it certainly looks like there’s an invisible blacklist.

Words like:

- “Ugly” (even in defense of someone)
- “Eww” or “Gross”
- “Knob” (used jokingly in British slang)
- “How” (literally just the word)
- References to Stranger Things (like “Demogorgon”)
- The clown emoji
- Political phrases, even vague ones

None of these are offensive on their own. But they get flagged anyway.

One theory is that TikTok uses an aggressive AI filter trained on sensitive keywords without nuance or context. Another theory is that recent server changes shifted moderation thresholds — possibly separating U.S. and global data enforcement.

Some users believe the 16-hour blackout earlier this year marked a major backend change. Since then, they say, keyword moderation has gotten worse, and search results now vary drastically between the U.S. and other regions.

People are now testing comments on throwaway accounts just to see what gets flagged. That’s where we’re at.

You can’t even trust an emoji anymore.

Commenting on TikTok? You’re Risking Everything

The platform’s own users are now warning each other:

“If you value your account, stop commenting.”

That’s what it’s come to.

One user said they comment only from burner accounts. Another shared they were permanently banned just for replying “boo” or “lol” under random posts.

And this isn’t just paranoia.

Even “creator” accounts — with large followings and brand deals — are getting wiped for harmless replies. TikTok doesn’t care how big you are. If the AI flags your comment, that’s it.

The community is starting to realize that interaction is dangerous.

But that breaks the whole point of social media.

As one user put it:

“The entire point of social media is to interact with people.”

Now people are afraid to say anything at all.

Others are quitting the app completely, saying they’ve moved to platforms like YouTube, where they find moderation more consistent and monetization still possible.

And when creators start walking, the platform loses the content that made it worth opening in the first place.

Why TikTok Isn’t Saying Anything

The silence from TikTok is making it worse.

People file appeal after appeal and get nothing but vague rejections. They contact support and are met with bot responses. They try feedback forms and either:

- Can’t find them
- Can’t access them
- Or get responses that make no sense

One user said they were locked out of feedback forms completely, even in incognito mode and on new devices. Another tried contacting TikTok support 200 times just to reach a human.

Still nothing.

The company offers no explanation, no guidance, no way to tell what comment triggered the strike. There’s no published list of forbidden words or warning thresholds. You’re just supposed to know — and if you guess wrong, you’re gone.

For an app that runs on user content, it’s shocking how little regard it has for the users themselves.

Even the idea of “free speech” on TikTok is now laughable. Criticism of powerful figures, political statements, and comments defending other users are all being flagged, while offensive content still finds a home.

Where Do Users Go From Here?

Right now, there’s no good answer.

If you post content on TikTok — or even just comment on others — you’re at risk. The safest users are the ones who don’t engage at all, which defeats the purpose of the app.

Some people are walking away entirely. Others are moving to:

- YouTube Shorts: creators feel they have more monetization options and fewer random takedowns
- Instagram Reels: despite Meta’s own moderation issues, it’s often more predictable
- Burner accounts: just to comment freely without risking their main profile

And a growing number are turning to tools like Flick to pre-schedule content across platforms and back everything up before TikTok takes it all away.

The message is clear: if you rely on TikTok, you need a backup plan.

Because at any moment, a single emoji or comment like “how” could erase everything you’ve built.

Want to stay ahead of unpredictable platform changes?

Start using Flick to schedule and protect your content — across TikTok, Instagram, and more — without fear of getting wiped.

Also consider setting up your subscriber base with Systeme IO so you’re not at the mercy of one app’s broken moderation.